mirror of

https://github.com/FEX-Emu/xxHash.git

synced 2025-01-31 19:53:05 +00:00

Let the Great Typo Hunt commence!

Work in progress. - Fix many spelling/grammar issues, primarily in comments - Remove most spaces before punctuation - Update XXH3 comment - Wrap most comments to 80 columns - Unify most comments to use the same style - Use hexadecimal in the xxhash spec - Update help messages to better match POSIX/GNU conventions - Use HTML escapes in README.md to avoid UTF-8 - Mark outdated benchmark/scores

This commit is contained in:

parent

22abeae0eb

commit

9eb91a3b53

101

README.md

101

README.md

@ -1,6 +1,7 @@

|

||||

xxHash - Extremely fast hash algorithm

|

||||

======================================

|

||||

|

||||

<!-- TODO: Update. -->

|

||||

xxHash is an Extremely fast Hash algorithm, running at RAM speed limits.

|

||||

It successfully completes the [SMHasher](http://code.google.com/p/smhasher/wiki/SMHasher) test suite

|

||||

which evaluates collision, dispersion and randomness qualities of hash functions.

|

||||

@ -20,21 +21,21 @@ The benchmark uses SMHasher speed test, compiled with Visual 2010 on a Windows S

|

||||

The reference system uses a Core 2 Duo @3GHz

|

||||

|

||||

|

||||

| Name | Speed | Quality | Author |

|

||||

|---------------|-------------|:-------:|-------------------|

|

||||

| [xxHash] | 5.4 GB/s | 10 | Y.C. |

|

||||

| MurmurHash 3a | 2.7 GB/s | 10 | Austin Appleby |

|

||||

| SBox | 1.4 GB/s | 9 | Bret Mulvey |

|

||||

| Lookup3 | 1.2 GB/s | 9 | Bob Jenkins |

|

||||

| CityHash64 | 1.05 GB/s | 10 | Pike & Alakuijala |

|

||||

| FNV | 0.55 GB/s | 5 | Fowler, Noll, Vo |

|

||||

| CRC32 | 0.43 GB/s † | 9 | |

|

||||

| MD5-32 | 0.33 GB/s | 10 | Ronald L.Rivest |

|

||||

| SHA1-32 | 0.28 GB/s | 10 | |

|

||||

| Name | Speed | Quality | Author |

|

||||

|---------------|--------------------|:-------:|-------------------|

|

||||

| [xxHash] | 5.4 GB/s | 10 | Y.C. |

|

||||

| MurmurHash 3a | 2.7 GB/s | 10 | Austin Appleby |

|

||||

| SBox | 1.4 GB/s | 9 | Bret Mulvey |

|

||||

| Lookup3 | 1.2 GB/s | 9 | Bob Jenkins |

|

||||

| CityHash64 | 1.05 GB/s | 10 | Pike & Alakuijala |

|

||||

| FNV | 0.55 GB/s | 5 | Fowler, Noll, Vo |

|

||||

| CRC32 | 0.43 GB/s † | 9 | |

|

||||

| MD5-32 | 0.33 GB/s | 10 | Ronald L.Rivest |

|

||||

| SHA1-32 | 0.28 GB/s | 10 | |

|

||||

|

||||

[xxHash]: http://www.xxhash.com

|

||||

|

||||

Note †: SMHasher's CRC32 implementation is known to be slow. Faster implementations exist.

|

||||

Note †: SMHasher's CRC32 implementation is known to be slow. Faster implementations exist.

|

||||

|

||||

Q.Score is a measure of quality of the hash function.

|

||||

It depends on successfully passing SMHasher test set.

|

||||

@ -48,13 +49,13 @@ Note however that 32-bit applications will still run faster using the 32-bit ver

|

||||

SMHasher speed test, compiled using GCC 4.8.2, on Linux Mint 64-bit.

|

||||

The reference system uses a Core i5-3340M @2.7GHz

|

||||

|

||||

| Version | Speed on 64-bit | Speed on 32-bit |

|

||||

| Version | Speed on 64-bit | Speed on 32-bit |

|

||||

|------------|------------------|------------------|

|

||||

| XXH64 | 13.8 GB/s | 1.9 GB/s |

|

||||

| XXH32 | 6.8 GB/s | 6.0 GB/s |

|

||||

|

||||

This project also includes a command line utility, named `xxhsum`, offering similar features as `md5sum`,

|

||||

thanks to [Takayuki Matsuoka](https://github.com/t-mat) contributions.

|

||||

This project also includes a command line utility, named `xxhsum`, offering similar features to `md5sum`,

|

||||

thanks to [Takayuki Matsuoka](https://github.com/t-mat)'s contributions.

|

||||

|

||||

|

||||

### License

|

||||

@ -65,61 +66,59 @@ The utility `xxhsum` is GPL licensed.

|

||||

|

||||

### New hash algorithms

|

||||

|

||||

Starting with `v0.7.0`, the library includes a new algorithm, named `XXH3`,

|

||||

able to generate 64 and 128-bits hashes.

|

||||

Starting with `v0.7.0`, the library includes a new algorithm named `XXH3`,

|

||||

which is able to generate 64 and 128-bit hashes.

|

||||

|

||||

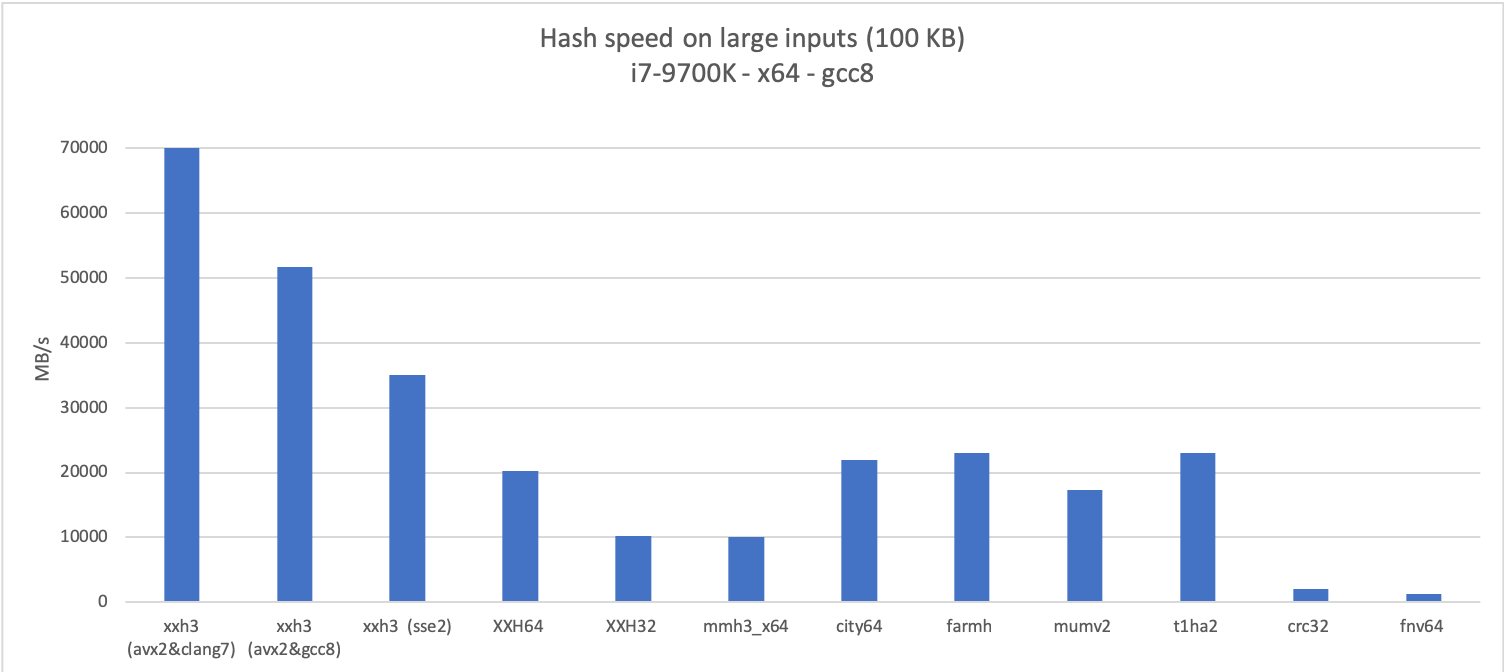

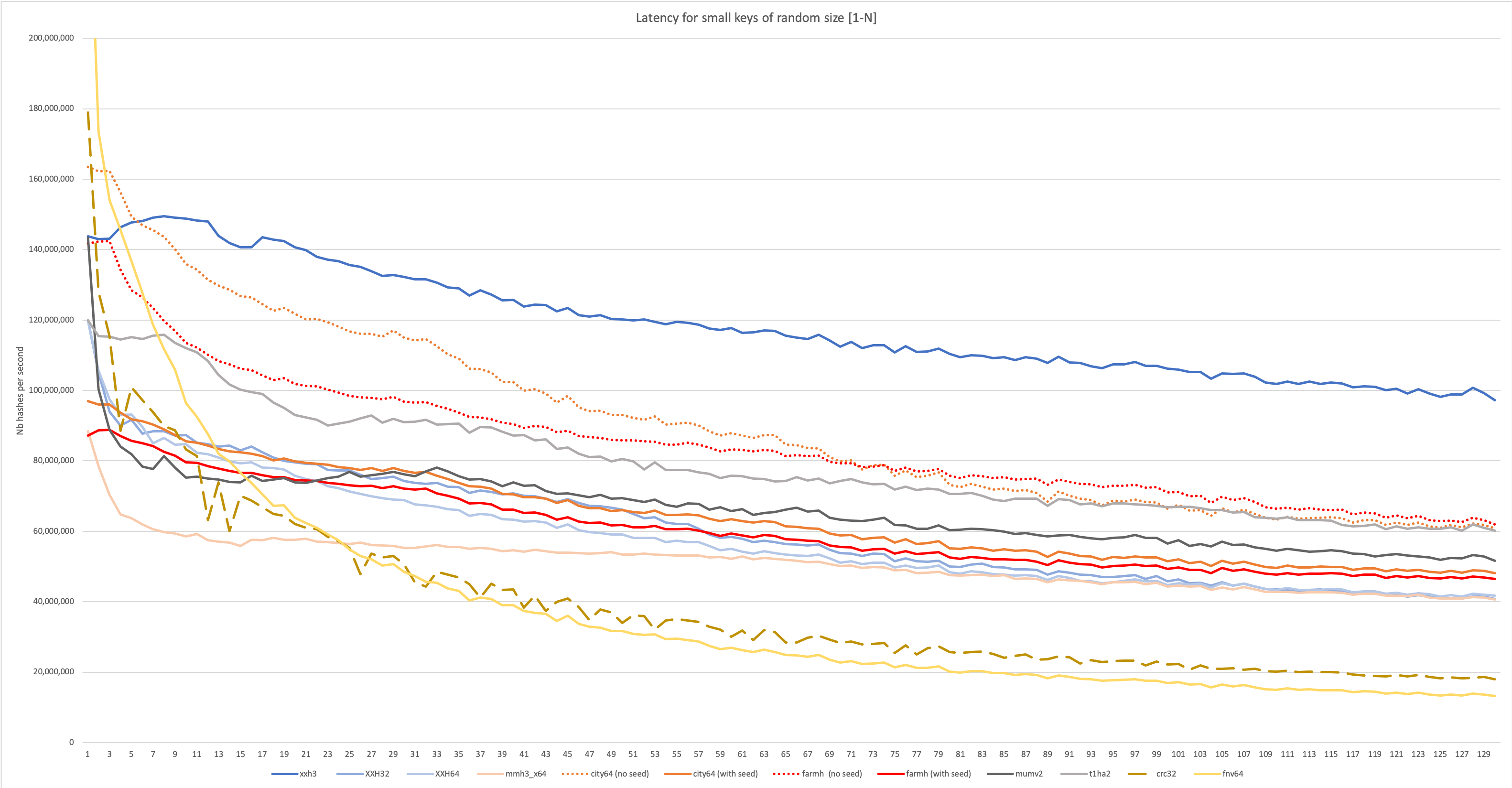

The new algorithm is much faster than its predecessors,

|

||||

for both long and small inputs,

|

||||

which can be observed in the following graphs :

|

||||

The new algorithm is much faster than its predecessors for both long and small inputs,

|

||||

which can be observed in the following graphs:

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

To access these new prototypes, one needs to unlock their declaration, using build the macro `XXH_STATIC_LINKING_ONLY`.

|

||||

To access these new prototypes, one needs to unlock their declaration, using the build macro `XXH_STATIC_LINKING_ONLY`.

|

||||

|

||||

The algorithm is currently in development, meaning its return values might still change in future versions.

|

||||

However, the implementation is stable, and can be used in production,

|

||||

typically for ephemeral data (produced and consumed in same session).

|

||||

`XXH3` return values will be finalized on reaching `v0.8.0`.

|

||||

However, the API is stable, and can be used in production, typically for ephemeral

|

||||

data (produced and consumed in same session).

|

||||

|

||||

`XXH3`'s return values will be finalized upon reaching `v0.8.0`.

|

||||

|

||||

|

||||

### Build modifiers

|

||||

|

||||

The following macros can be set at compilation time,

|

||||

they modify libxxhash behavior. They are all disabled by default.

|

||||

The following macros can be set at compilation time to modify libxxhash's behavior. They are all disabled by default.

|

||||

|

||||

- `XXH_INLINE_ALL` : Make all functions `inline`, with bodies directly included within `xxhash.h`.

|

||||

- `XXH_INLINE_ALL` : Make all functions `inline`, with implementations being directly included within `xxhash.h`.

|

||||

Inlining functions is beneficial for speed on small keys.

|

||||

It's _extremely effective_ when key length is expressed as _a compile time constant_,

|

||||

with performance improvements observed in the +200% range .

|

||||

with performance improvements being observed in the +200% range .

|

||||

See [this article](https://fastcompression.blogspot.com/2018/03/xxhash-for-small-keys-impressive-power.html) for details.

|

||||

Note: there is no need to compile an `xxhash.o` object file in this case.

|

||||

- `XXH_NO_INLINE_HINTS` : By default, xxHash uses tricks like `__attribute__((always_inline))` and `__forceinline` to try and improve performance at the cost of code size. Defining this to 1 will mark all internal functions as `static`, allowing the compiler to decide whether to inline a function or not. This is very useful when optimizing for the smallest binary size, and it is automatically defined when compiling with `-O0`, `-Os`, `-Oz`, or `-fno-inline` on GCC and Clang. This may also increase performance depending on the compiler and the architecture.

|

||||

- `XXH_REROLL` : reduce size of generated code. Impact on performance vary, depending on platform and algorithm.

|

||||

- `XXH_NO_INLINE_HINTS`: By default, xxHash uses tricks like `__attribute__((always_inline))` and `__forceinline` to try and improve performance at the cost of code size. Defining this to 1 will mark all internal functions as `static`, allowing the compiler to decide whether to inline a function or not. This is very useful when optimizing for the smallest binary size, and it is automatically defined when compiling with `-O0`, `-Os`, `-Oz`, or `-fno-inline` on GCC and Clang. This may also increase performance depending on the compiler and the architecture.

|

||||

- `XXH_REROLL`: Reduces the size of the generated code by not unrolling some loops. Impact on performance may vary, depending on the platform and the algorithm.

|

||||

- `XXH_ACCEPT_NULL_INPUT_POINTER` : if set to `1`, when input is a `NULL` pointer,

|

||||

xxhash result is the same as a zero-length input

|

||||

xxHash'd result is the same as a zero-length input

|

||||

(instead of a dereference segfault).

|

||||

Adds one branch at the beginning of the hash.

|

||||

- `XXH_FORCE_MEMORY_ACCESS` : default method `0` uses a portable `memcpy()` notation.

|

||||

- `XXH_FORCE_MEMORY_ACCESS` : The default method `0` uses a portable `memcpy()` notation.

|

||||

Method `1` uses a gcc-specific `packed` attribute, which can provide better performance for some targets.

|

||||

Method `2` forces unaligned reads, which is not standard compliant, but might sometimes be the only way to extract better read performance.

|

||||

Method `2` forces unaligned reads, which is not standards compliant, but might sometimes be the only way to extract better read performance.

|

||||

Method `3` uses a byteshift operation, which is best for old compilers which don't inline `memcpy()` or big-endian systems without a byteswap instruction

|

||||

- `XXH_CPU_LITTLE_ENDIAN` : by default, endianess is determined at compile time.

|

||||

It's possible to skip auto-detection and force format to little-endian, by setting this macro to 1.

|

||||

- `XXH_CPU_LITTLE_ENDIAN`: By default, endianess is determined at compile time.

|

||||

It's possible to skip auto-detection and force format to little-endian, by setting this macro to 1.

|

||||

Setting it to 0 forces big-endian.

|

||||

- `XXH_PRIVATE_API` : same impact as `XXH_INLINE_ALL`.

|

||||

Name underlines that XXH_* symbols will not be published.

|

||||

- `XXH_NAMESPACE` : prefix all symbols with the value of `XXH_NAMESPACE`.

|

||||

- `XXH_PRIVATE_API`: same impact as `XXH_INLINE_ALL`.

|

||||

Name underlines that XXH_* symbols will not be exported.

|

||||

- `XXH_NAMESPACE`: Prefixes all symbols with the value of `XXH_NAMESPACE`.

|

||||

Useful to evade symbol naming collisions,

|

||||

in case of multiple inclusions of xxHash source code.

|

||||

Client applications can still use regular function name,

|

||||

symbols are automatically translated through `xxhash.h`.

|

||||

- `XXH_STATIC_LINKING_ONLY` : gives access to state declaration for static allocation.

|

||||

Incompatible with dynamic linking, due to risks of ABI changes.

|

||||

- `XXH_NO_LONG_LONG` : removes support for XXH64,

|

||||

for targets without 64-bit support.

|

||||

- `XXH_IMPORT` : MSVC specific : should only be defined for dynamic linking, it prevents linkage errors.

|

||||

in case of multiple inclusions of xxHash's source code.

|

||||

Client applications can still use the regular function name,

|

||||

as symbols are automatically translated through `xxhash.h`.

|

||||

- `XXH_STATIC_LINKING_ONLY`: gives access to the state declaration for static allocation.

|

||||

Incompatible with dynamic linking, due to risks of ABI changes.

|

||||

- `XXH_NO_LONG_LONG`: removes support for XXH3 and XXH64 for targets without 64-bit support.

|

||||

- `XXH_IMPORT`: MSVC specific: should only be defined for dynamic linking, as it prevents linkage errors.

|

||||

|

||||

|

||||

### Building xxHash - Using vcpkg

|

||||

@ -137,7 +136,7 @@ The xxHash port in vcpkg is kept up to date by Microsoft team members and commun

|

||||

|

||||

### Example

|

||||

|

||||

Calling xxhash 64-bit variant from a C program :

|

||||

Calling xxhash 64-bit variant from a C program:

|

||||

|

||||

```C

|

||||

#include "xxhash.h"

|

||||

@ -147,7 +146,7 @@ Calling xxhash 64-bit variant from a C program :

|

||||

}

|

||||

```

|

||||

|

||||

Using streaming variant is more involved, but makes it possible to provide data incrementally :

|

||||

Using streaming variant is more involved, but makes it possible to provide data incrementally:

|

||||

```C

|

||||

#include "stdlib.h" /* abort() */

|

||||

#include "xxhash.h"

|

||||

@ -190,9 +189,9 @@ XXH64_hash_t calcul_hash_streaming(FileHandler fh)

|

||||

|

||||

### Other programming languages

|

||||

|

||||

Beyond the C reference version,

|

||||

xxHash is also available in many programming languages,

|

||||

thanks to great contributors.

|

||||

Aside from the C reference version,

|

||||

xxHash is also available in many different programming languages,

|

||||

thanks to many great contributors.

|

||||

They are [listed here](http://www.xxhash.com/#other-languages).

|

||||

|

||||

|

||||

|

||||

@ -31,25 +31,25 @@ Table of Contents

|

||||

Introduction

|

||||

----------------

|

||||

|

||||

This document describes the xxHash digest algorithm, for both 32 and 64 variants, named `XXH32` and `XXH64`. The algorithm takes as input a message of arbitrary length and an optional seed value, it then produces an output of 32 or 64-bit as "fingerprint" or "digest".

|

||||

This document describes the xxHash digest algorithm for both 32-bit and 64-bit variants, named `XXH32` and `XXH64`. The algorithm takes an input a message of arbitrary length and an optional seed value, then produces an output of 32 or 64-bit as "fingerprint" or "digest".

|

||||

|

||||

xxHash is primarily designed for speed. It is labelled non-cryptographic, and is not meant to avoid intentional collisions (same digest for 2 different messages), or to prevent producing a message with predefined digest.

|

||||

xxHash is primarily designed for speed. It is labeled non-cryptographic, and is not meant to avoid intentional collisions (same digest for 2 different messages), or to prevent producing a message with a predefined digest.

|

||||

|

||||

XXH32 is designed to be fast on 32-bits machines.

|

||||

XXH64 is designed to be fast on 64-bits machines.

|

||||

XXH32 is designed to be fast on 32-bit machines.

|

||||

XXH64 is designed to be fast on 64-bit machines.

|

||||

Both variants produce different output.

|

||||

However, a given variant shall produce exactly the same output, irrespective of the cpu / os used. In particular, the result remains identical whatever the endianness and width of the cpu.

|

||||

However, a given variant shall produce exactly the same output, irrespective of the cpu / os used. In particular, the result remains identical whatever the endianness and width of the cpu is.

|

||||

|

||||

### Operation notations

|

||||

|

||||

All operations are performed modulo {32,64} bits. Arithmetic overflows are expected.

|

||||

`XXH32` uses 32-bit modular operations. `XXH64` uses 64-bit modular operations.

|

||||

|

||||

- `+` : denote modular addition

|

||||

- `*` : denote modular multiplication

|

||||

- `X <<< s` : denote the value obtained by circularly shifting (rotating) `X` left by `s` bit positions.

|

||||

- `X >> s` : denote the value obtained by shifting `X` right by s bit positions. Upper `s` bits become `0`.

|

||||

- `X xor Y` : denote the bit-wise XOR of `X` and `Y` (same width).

|

||||

- `+`: denotes modular addition

|

||||

- `*`: denotes modular multiplication

|

||||

- `X <<< s`: denotes the value obtained by circularly shifting (rotating) `X` left by `s` bit positions.

|

||||

- `X >> s`: denotes the value obtained by shifting `X` right by s bit positions. Upper `s` bits become `0`.

|

||||

- `X xor Y`: denotes the bit-wise XOR of `X` and `Y` (same width).

|

||||

|

||||

|

||||

XXH32 Algorithm Description

|

||||

@ -61,13 +61,13 @@ We begin by supposing that we have a message of any length `L` as input, and tha

|

||||

|

||||

The algorithm collect and transform input in _stripes_ of 16 bytes. The transforms are stored inside 4 "accumulators", each one storing an unsigned 32-bit value. Each accumulator can be processed independently in parallel, speeding up processing for cpu with multiple execution units.

|

||||

|

||||

The algorithm uses 32-bits addition, multiplication, rotate, shift and xor operations. Many operations require some 32-bits prime number constants, all defined below :

|

||||

The algorithm uses 32-bits addition, multiplication, rotate, shift and xor operations. Many operations require some 32-bits prime number constants, all defined below:

|

||||

|

||||

static const u32 PRIME32_1 = 2654435761U; // 0b10011110001101110111100110110001

|

||||

static const u32 PRIME32_2 = 2246822519U; // 0b10000101111010111100101001110111

|

||||

static const u32 PRIME32_3 = 3266489917U; // 0b11000010101100101010111000111101

|

||||

static const u32 PRIME32_4 = 668265263U; // 0b00100111110101001110101100101111

|

||||

static const u32 PRIME32_5 = 374761393U; // 0b00010110010101100110011110110001

|

||||

static const u32 PRIME32_1 = 0x9E3779B1U; // 0b10011110001101110111100110110001

|

||||

static const u32 PRIME32_2 = 0x85EBCA77U; // 0b10000101111010111100101001110111

|

||||

static const u32 PRIME32_3 = 0xC2B2AE3DU; // 0b11000010101100101010111000111101

|

||||

static const u32 PRIME32_4 = 0x27D4EB2FU; // 0b00100111110101001110101100101111

|

||||

static const u32 PRIME32_5 = 0x165667B1U; // 0b00010110010101100110011110110001

|

||||

|

||||

These constants are prime numbers, and feature a good mix of bits 1 and 0, neither too regular, nor too dissymmetric. These properties help dispersion capabilities.

|

||||

|

||||

@ -80,11 +80,11 @@ Each accumulator gets an initial value based on optional `seed` input. Since the

|

||||

u32 acc3 = seed + 0;

|

||||

u32 acc4 = seed - PRIME32_1;

|

||||

|

||||

#### Special case : input is less than 16 bytes

|

||||

#### Special case: input is less than 16 bytes

|

||||

|

||||

When input is too small (< 16 bytes), the algorithm will not process any stripe. Consequently, it will not make use of parallel accumulators.

|

||||

When the input is too small (< 16 bytes), the algorithm will not process any stripes. Consequently, it will not make use of parallel accumulators.

|

||||

|

||||

In which case, a simplified initialization is performed, using a single accumulator :

|

||||

In this case, a simplified initialization is performed, using a single accumulator:

|

||||

|

||||

u32 acc = seed + PRIME32_5;

|

||||

|

||||

@ -106,12 +106,12 @@ For each {lane, accumulator}, the update process is called a _round_, and applie

|

||||

|

||||

This shuffles the bits so that any bit from input _lane_ impacts several bits in output _accumulator_. All operations are performed modulo 2^32.

|

||||

|

||||

Input is consumed one full stripe at a time. Step 2 is looped as many times as necessary to consume the whole input, except the last remaining bytes which cannot form a stripe (< 16 bytes).

|

||||

Input is consumed one full stripe at a time. Step 2 is looped as many times as necessary to consume the whole input, except for the last remaining bytes which cannot form a stripe (< 16 bytes).

|

||||

When that happens, move to step 3.

|

||||

|

||||

### Step 3. Accumulator convergence

|

||||

|

||||

All 4 lane accumulators from previous steps are merged to produce a single remaining accumulator of same width (32-bit). The associated formula is as follows :

|

||||

All 4 lane accumulators from the previous steps are merged to produce a single remaining accumulator of the same width (32-bit). The associated formula is as follows:

|

||||

|

||||

acc = (acc1 <<< 1) + (acc2 <<< 7) + (acc3 <<< 12) + (acc4 <<< 18);

|

||||

|

||||

@ -126,7 +126,7 @@ Note that, if input length is so large that it requires more than 32-bits, only

|

||||

### Step 5. Consume remaining input

|

||||

|

||||

There may be up to 15 bytes remaining to consume from the input.

|

||||

The final stage will digest them according to following pseudo-code :

|

||||

The final stage will digest them according to following pseudo-code:

|

||||

|

||||

while (remainingLength >= 4) {

|

||||

lane = read_32bit_little_endian(input_ptr);

|

||||

@ -166,17 +166,17 @@ XXH64 Algorithm Description

|

||||

|

||||

### Overview

|

||||

|

||||

`XXH64` algorithm structure is very similar to `XXH32` one. The major difference is that `XXH64` uses 64-bit arithmetic, speeding up memory transfer for 64-bit compliant systems, but also relying on cpu capability to efficiently perform 64-bit operations.

|

||||

`XXH64`'s algorithm structure is very similar to `XXH32` one. The major difference is that `XXH64` uses 64-bit arithmetic, speeding up memory transfer for 64-bit compliant systems, but also relying on cpu capability to efficiently perform 64-bit operations.

|

||||

|

||||

The algorithm collects and transforms input in _stripes_ of 32 bytes. The transforms are stored inside 4 "accumulators", each one storing an unsigned 64-bit value. Each accumulator can be processed independently in parallel, speeding up processing for cpu with multiple execution units.

|

||||

|

||||

The algorithm uses 64-bit addition, multiplication, rotate, shift and xor operations. Many operations require some 64-bit prime number constants, all defined below :

|

||||

The algorithm uses 64-bit addition, multiplication, rotate, shift and xor operations. Many operations require some 64-bit prime number constants, all defined below:

|

||||

|

||||

static const u64 PRIME64_1 = 11400714785074694791ULL; // 0b1001111000110111011110011011000110000101111010111100101010000111

|

||||

static const u64 PRIME64_2 = 14029467366897019727ULL; // 0b1100001010110010101011100011110100100111110101001110101101001111

|

||||

static const u64 PRIME64_3 = 1609587929392839161ULL; // 0b0001011001010110011001111011000110011110001101110111100111111001

|

||||

static const u64 PRIME64_4 = 9650029242287828579ULL; // 0b1000010111101011110010100111011111000010101100101010111001100011

|

||||

static const u64 PRIME64_5 = 2870177450012600261ULL; // 0b0010011111010100111010110010111100010110010101100110011111000101

|

||||

static const u64 PRIME64_1 = 0x9E3779B185EBCA87ULL; // 0b1001111000110111011110011011000110000101111010111100101010000111

|

||||

static const u64 PRIME64_2 = 0xC2B2AE3D27D4EB4FULL; // 0b1100001010110010101011100011110100100111110101001110101101001111

|

||||

static const u64 PRIME64_3 = 0x165667B19E3779F9ULL; // 0b0001011001010110011001111011000110011110001101110111100111111001

|

||||

static const u64 PRIME64_4 = 0x85EBCA77C2B2AE63ULL; // 0b1000010111101011110010100111011111000010101100101010111001100011

|

||||

static const u64 PRIME64_5 = 0x27D4EB2F165667C5ULL; // 0b0010011111010100111010110010111100010110010101100110011111000101

|

||||

|

||||

These constants are prime numbers, and feature a good mix of bits 1 and 0, neither too regular, nor too dissymmetric. These properties help dispersion capabilities.

|

||||

|

||||

@ -189,11 +189,11 @@ Each accumulator gets an initial value based on optional `seed` input. Since the

|

||||

u64 acc3 = seed + 0;

|

||||

u64 acc4 = seed - PRIME64_1;

|

||||

|

||||

#### Special case : input is less than 32 bytes

|

||||

#### Special case: input is less than 32 bytes

|

||||

|

||||

When input is too small (< 32 bytes), the algorithm will not process any stripe. Consequently, it will not make use of parallel accumulators.

|

||||

When the input is too small (< 32 bytes), the algorithm will not process any stripes. Consequently, it will not make use of parallel accumulators.

|

||||

|

||||

In which case, a simplified initialization is performed, using a single accumulator :

|

||||

In this case, a simplified initialization is performed, using a single accumulator:

|

||||

|

||||

u64 acc = seed + PRIME64_5;

|

||||

|

||||

@ -216,14 +216,14 @@ For each {lane, accumulator}, the update process is called a _round_, and applie

|

||||

|

||||

This shuffles the bits so that any bit from input _lane_ impacts several bits in output _accumulator_. All operations are performed modulo 2^64.

|

||||

|

||||

Input is consumed one full stripe at a time. Step 2 is looped as many times as necessary to consume the whole input, except the last remaining bytes which cannot form a stripe (< 32 bytes).

|

||||

Input is consumed one full stripe at a time. Step 2 is looped as many times as necessary to consume the whole input, except for the last remaining bytes which cannot form a stripe (< 32 bytes).

|

||||

When that happens, move to step 3.

|

||||

|

||||

### Step 3. Accumulator convergence

|

||||

|

||||

All 4 lane accumulators from previous steps are merged to produce a single remaining accumulator of same width (64-bit). The associated formula is as follows.

|

||||

|

||||

Note that accumulator convergence is more complex than 32-bit variant, and requires to define another function called _mergeAccumulator()_ :

|

||||

Note that accumulator convergence is more complex than 32-bit variant, and requires to define another function called _mergeAccumulator()_:

|

||||

|

||||

mergeAccumulator(acc,accN):

|

||||

acc = acc xor round(0, accN);

|

||||

@ -247,7 +247,7 @@ The input total length is presumed known at this stage. This step is just about

|

||||

### Step 5. Consume remaining input

|

||||

|

||||

There may be up to 31 bytes remaining to consume from the input.

|

||||

The final stage will digest them according to following pseudo-code :

|

||||

The final stage will digest them according to following pseudo-code:

|

||||

|

||||

while (remainingLength >= 8) {

|

||||

lane = read_64bit_little_endian(input_ptr);

|

||||

@ -299,18 +299,19 @@ The algorithm allows input to be streamed and processed in multiple steps. In su

|

||||

|

||||

On 64-bit systems, the 64-bit variant `XXH64` is generally faster to compute, so it is a recommended variant, even when only 32-bit are needed.

|

||||

|

||||

On 32-bit systems though, positions are reversed : `XXH64` performance is reduced, due to its usage of 64-bit arithmetic. `XXH32` becomes a faster variant.

|

||||

On 32-bit systems though, positions are reversed: `XXH64` performance is reduced, due to its usage of 64-bit arithmetic. `XXH32` becomes a faster variant.

|

||||

|

||||

|

||||

Reference Implementation

|

||||

----------------------------------------

|

||||

|

||||

A reference library written in C is available at http://www.xxhash.com .

|

||||

A reference library written in C is available at http://www.xxhash.com.

|

||||

The web page also links to multiple other implementations written in many different languages.

|

||||

It links to the [github project page](https://github.com/Cyan4973/xxHash) where an [issue board](https://github.com/Cyan4973/xxHash/issues) can be used for further public discussions on the topic.

|

||||

|

||||

|

||||

Version changes

|

||||

--------------------

|

||||

v0.1.1 : added a note on rationale for selection of constants

|

||||

v0.1.0 : initial release

|

||||

v0.7.3: Minor fixes

|

||||

v0.1.1: added a note on rationale for selection of constants

|

||||

v0.1.0: initial release

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

/*

|

||||

* Hash benchmark module

|

||||

* Part of xxHash project

|

||||

* Part of the xxHash project

|

||||

* Copyright (C) 2019-present, Yann Collet

|

||||

*

|

||||

* GPL v2 License

|

||||

@ -19,9 +19,9 @@

|

||||

* with this program; if not, write to the Free Software Foundation, Inc.,

|

||||

* 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA.

|

||||

*

|

||||

* You can contact the author at :

|

||||

* - xxHash homepage : http://www.xxhash.com

|

||||

* - xxHash source repository : https://github.com/Cyan4973/xxHash

|

||||

* You can contact the author at:

|

||||

* - xxHash homepage: http://www.xxhash.com

|

||||

* - xxHash source repository: https://github.com/Cyan4973/xxHash

|

||||

*/

|

||||

|

||||

/* benchmark hash functions */

|

||||

@ -50,9 +50,10 @@ static void initBuffer(void* buffer, size_t size)

|

||||

|

||||

typedef size_t (*sizeFunction_f)(size_t targetSize);

|

||||

|

||||

/* bench_hash_internal() :

|

||||

* benchmark hashfn repeateadly over single input of size `size`

|

||||

* return : nb of hashes per second

|

||||

/*

|

||||

* bench_hash_internal():

|

||||

* Benchmarks hashfn repeateadly over single input of size `size`

|

||||

* return: nb of hashes per second

|

||||

*/

|

||||

static double

|

||||

bench_hash_internal(BMK_benchFn_t hashfn, void* payload,

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

/*

|

||||

* Hash benchmark module

|

||||

* Part of xxHash project

|

||||

* Part of the xxHash project

|

||||

* Copyright (C) 2019-present, Yann Collet

|

||||

*

|

||||

* GPL v2 License

|

||||

@ -19,9 +19,9 @@

|

||||

* with this program; if not, write to the Free Software Foundation, Inc.,

|

||||

* 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA.

|

||||

*

|

||||

* You can contact the author at :

|

||||

* - xxHash homepage : http://www.xxhash.com

|

||||

* - xxHash source repository : https://github.com/Cyan4973/xxHash

|

||||

* You can contact the author at:

|

||||

* - xxHash homepage: http://www.xxhash.com

|

||||

* - xxHash source repository: https://github.com/Cyan4973/xxHash

|

||||

*/

|

||||

|

||||

|

||||

@ -46,10 +46,12 @@ typedef enum { BMK_fixedSize, /* hash always `size` bytes */

|

||||

BMK_randomSize, /* hash a random nb of bytes, between 1 and `size` (inclusive) */

|

||||

} BMK_sizeMode;

|

||||

|

||||

/* bench_hash() :

|

||||

* returns speed expressed as nb hashes per second.

|

||||

* total_time_ms : time spent benchmarking the hash function with given parameters

|

||||

* iter_time_ms : time spent for one round. If multiple rounds are run, bench_hash() will report the speed of best round.

|

||||

/*

|

||||

* bench_hash():

|

||||

* Returns speed expressed as nb hashes per second.

|

||||

* total_time_ms: time spent benchmarking the hash function with given parameters

|

||||

* iter_time_ms: time spent for one round. If multiple rounds are run,

|

||||

* bench_hash() will report the speed of best round.

|

||||

*/

|

||||

double bench_hash(BMK_benchFn_t hashfn,

|

||||

BMK_benchMode benchMode,

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

/*

|

||||

* CSV Display module for the hash benchmark program

|

||||

* Part of xxHash project

|

||||

* Part of the xxHash project

|

||||

* Copyright (C) 2019-present, Yann Collet

|

||||

*

|

||||

* GPL v2 License

|

||||

@ -66,7 +66,7 @@ void bench_largeInput(Bench_Entry const* hashDescTable, int nbHashes, int minlog

|

||||

|

||||

|

||||

|

||||

/* === benchmark small input === */

|

||||

/* === Benchmark small inputs === */

|

||||

|

||||

#define BENCH_SMALL_ITER_MS 170

|

||||

#define BENCH_SMALL_TOTAL_MS 490

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

/*

|

||||

* CSV Display module for the hash benchmark program

|

||||

* Part of xxHash project

|

||||

* Part of the xxHash project

|

||||

* Copyright (C) 2019-present, Yann Collet

|

||||

*

|

||||

* GPL v2 License

|

||||

|

||||

@ -42,9 +42,10 @@

|

||||

#include <assert.h>

|

||||

|

||||

|

||||

/*! readIntFromChar() :

|

||||

* allows and interprets K, KB, KiB, M, MB and MiB suffix.

|

||||

* Will also modify `*stringPtr`, advancing it to position where it stopped reading.

|

||||

/*!

|

||||

* readIntFromChar():

|

||||

* Allows and interprets K, KB, KiB, M, MB and MiB suffix.

|

||||

* Will also modify `*stringPtr`, advancing it to position where it stopped reading.

|

||||

*/

|

||||

static int readIntFromChar(const char** stringPtr)

|

||||

{

|

||||

@ -72,25 +73,30 @@ static int readIntFromChar(const char** stringPtr)

|

||||

}

|

||||

|

||||

|

||||

/** longCommand() :

|

||||

* check if string is the same as longCommand.

|

||||

* If yes, @return 1 and advances *stringPtr to the position which immediately follows longCommand.

|

||||

* @return 0 and doesn't modify *stringPtr otherwise.

|

||||

/**

|

||||

* longCommand():

|

||||

* Checks if string is the same as longCommand.

|

||||

* If yes, @return 1, otherwise @return 0

|

||||

*/

|

||||

static int isCommand(const char* stringPtr, const char* longCommand)

|

||||

static int isCommand(const char* string, const char* longCommand)

|

||||

{

|

||||

assert(string);

|

||||

assert(longCommand);

|

||||

size_t const comSize = strlen(longCommand);

|

||||

assert(stringPtr); assert(longCommand);

|

||||

return !strncmp(stringPtr, longCommand, comSize);

|

||||

return !strncmp(string, longCommand, comSize);

|

||||

}

|

||||

|

||||

/** longCommandWArg() :

|

||||

* check if *stringPtr is the same as longCommand.

|

||||

* If yes, @return 1 and advances *stringPtr to the position which immediately follows longCommand.

|

||||

/*

|

||||

* longCommandWArg():

|

||||

* Checks if *stringPtr is the same as longCommand.

|

||||

* If yes, @return 1 and advances *stringPtr to the position which immediately

|

||||

* follows longCommand.

|

||||

* @return 0 and doesn't modify *stringPtr otherwise.

|

||||

*/

|

||||

static int longCommandWArg(const char** stringPtr, const char* longCommand)

|

||||

{

|

||||

assert(stringPtr);

|

||||

assert(longCommand);

|

||||

size_t const comSize = strlen(longCommand);

|

||||

int const result = isCommand(*stringPtr, longCommand);

|

||||

if (result) *stringPtr += comSize;

|

||||

@ -142,14 +148,16 @@ static int hashID(const char* hname)

|

||||

|

||||

static int help(const char* exename)

|

||||

{

|

||||

printf("usage : %s [options] [hash] \n\n", exename);

|

||||

printf("Usage: %s [options]... [hash]\n", exename);

|

||||

printf("Runs various benchmarks at various lengths for the listed hash functions\n");

|

||||

printf("and outputs them in a CSV format.\n\n");

|

||||

printf("Options: \n");

|

||||

printf("--list : name available hash algorithms and exit \n");

|

||||

printf("--mins=# : starting length for small size bench (default:%i) \n", SMALL_SIZE_MIN_DEFAULT);

|

||||

printf("--maxs=# : end length for small size bench (default:%i) \n", SMALL_SIZE_MAX_DEFAULT);

|

||||

printf("--minl=# : starting log2(length) for large size bench (default:%i) \n", LARGE_SIZELOG_MIN_DEFAULT);

|

||||

printf("--maxl=# : end log2(length) for large size bench (default:%i) \n", LARGE_SIZELOG_MAX_DEFAULT);

|

||||

printf("[hash] : is optional, bench all available hashes if not provided \n");

|

||||

printf(" --list Name available hash algorithms and exit \n");

|

||||

printf(" --mins=LEN Starting length for small size bench (default: %i) \n", SMALL_SIZE_MIN_DEFAULT);

|

||||

printf(" --maxs=LEN End length for small size bench (default: %i) \n", SMALL_SIZE_MAX_DEFAULT);

|

||||

printf(" --minl=LEN Starting log2(length) for large size bench (default: %i) \n", LARGE_SIZELOG_MIN_DEFAULT);

|

||||

printf(" --maxl=LEN End log2(length) for large size bench (default: %i) \n", LARGE_SIZELOG_MAX_DEFAULT);

|

||||

printf(" [hash] Optional, bench all available hashes if not provided \n");

|

||||

return 0;

|

||||

}

|

||||

|

||||

@ -180,17 +188,21 @@ int main(int argc, const char** argv)

|

||||

if (longCommandWArg(arg, "--maxl=")) { largeTest_log_max = readIntFromChar(arg); continue; }

|

||||

if (longCommandWArg(arg, "--mins=")) { smallTest_size_min = (size_t)readIntFromChar(arg); continue; }

|

||||

if (longCommandWArg(arg, "--maxs=")) { smallTest_size_max = (size_t)readIntFromChar(arg); continue; }

|

||||

/* not a command : must be a hash name */

|

||||

/* not a command: must be a hash name */

|

||||

hashNb = hashID(*arg);

|

||||

if (hashNb >= 0) {

|

||||

nb_h_test = 1;

|

||||

} else {

|

||||

/* not a hash name : error */

|

||||

/* not a hash name: error */

|

||||

return badusage(exename);

|

||||

}

|

||||

}

|

||||

|

||||

if (hashNb + nb_h_test > NB_HASHES) { printf("wrong hash selection \n"); return 1; } /* border case (requires (mis)using hidden command `--n=#`) */

|

||||

/* border case (requires (mis)using hidden command `--n=#`) */

|

||||

if (hashNb + nb_h_test > NB_HASHES) {

|

||||

printf("wrong hash selection \n");

|

||||

return 1;

|

||||

}

|

||||

|

||||

printf(" === benchmarking %i hash functions === \n", nb_h_test);

|

||||

if (largeTest_log_max >= largeTest_log_min) {

|

||||

|

||||

@ -9,7 +9,7 @@ By default, it will generate 24 billion of 64-bit hashes,

|

||||

requiring __192 GB of RAM__ for their storage.

|

||||

The number of hashes can be modified using command `--nbh=`.

|

||||

Be aware that testing the collision ratio of 64-bit hashes

|

||||

requires a very large amount of hashes (several billions) for meaningful measurements.

|

||||

requires a very large amount of hashes (several billion) for meaningful measurements.

|

||||

|

||||

To reduce RAM usage, an optional filter can be requested, with `--filter`.

|

||||

It reduces the nb of candidates to analyze, hence associated RAM budget.

|

||||

@ -22,9 +22,9 @@ It also doesn't allow advanced analysis of partial bitfields,

|

||||

since most hashes will be discarded and not stored.

|

||||

|

||||

When using the filter, the RAM budget consists of the filter and a list of candidates,

|

||||

which will be a fraction of original hash list.

|

||||

Using default settings (24 billions hashes, 32 GB filter),

|

||||

the number of potential candidates should be reduced to less than 2 billions,

|

||||

which will be a fraction of the original hash list.

|

||||

Using default settings (24 billion hashes, 32 GB filter),

|

||||

the number of potential candidates should be reduced to less than 2 billion,

|

||||

requiring ~14 GB for their storage.

|

||||

Such a result also depends on hash algorithm's efficiency.

|

||||

The number of effective candidates is likely to be lower, at ~ 1 billion,

|

||||

@ -37,26 +37,26 @@ For the default test, the expected "optimal" collision rate for a 64-bit hash fu

|

||||

make

|

||||

```

|

||||

|

||||

Note : the code is a mix of C99 and C++14,

|

||||

Note: the code is a mix of C99 and C++14,

|

||||

it's not compatible with a C90-only compiler.

|

||||

|

||||

#### Build modifier

|

||||

|

||||

- `SLAB5` : use alternative pattern generator, friendlier for weak hash algorithms

|

||||

- `POOL_MT` : if `=0`, disable multi-treading code (enabled by default)

|

||||

- `SLAB5`: use alternative pattern generator, friendlier for weak hash algorithms

|

||||

- `POOL_MT`: if `=0`, disable multi-threading code (enabled by default)

|

||||

|

||||

#### How to integrate any hash in the tester

|

||||

|

||||

The build script is expecting to compile files found in `./allcodecs`.

|

||||

The build script will compile files found in `./allcodecs`.

|

||||

Put the source code here.

|

||||

This also works if the hash is a single `*.h` file.

|

||||

|

||||

The glue happens in `hashes.h`.

|

||||

In this file, there are 2 sections :

|

||||

- Add the required `#include "header.h"`, and create a wrapper,

|

||||

In this file, there are 2 sections:

|

||||

- Adds the required `#include "header.h"`, and creates a wrapper

|

||||

to respect the format expected by the function pointer.

|

||||

- Add the wrapper, along with the name and an indication of the output width,

|

||||

to the table, at the end of `hashed.h`

|

||||

- Adds the wrapper, along with the name and an indication of the output width,

|

||||

to the table, at the end of `hashes.h`

|

||||

|

||||

Build with `make`. Locate your new hash with `./collisionsTest -h`,

|

||||

it should be listed.

|

||||

@ -67,13 +67,13 @@ it should be listed.

|

||||

```

|

||||

usage: ./collisionsTest [hashName] [opt]

|

||||

|

||||

list of hashNames : (...)

|

||||

list of hashNames: (...)

|

||||

|

||||

Optional parameters:

|

||||

--nbh=# : select nb of hashes to generate (25769803776 by default)

|

||||

--filter : activated the filter. Reduce memory usage for same nb of hashes. Slower.

|

||||

--threadlog=# : use 2^# threads

|

||||

--len=# : select length of input (255 bytes by default)

|

||||

--nbh=NB Select nb of hashes to generate (25769803776 by default)

|

||||

--filter Enable the filter. Slower, but reduces memory usage for same nb of hashes.

|

||||

--threadlog=NB Use 2^NB threads

|

||||

--len=NB Select length of input (255 bytes by default)

|

||||

```

|

||||

|

||||

#### Some advises on how to setup a collisions test

|

||||

@ -91,24 +91,24 @@ By requesting 14G, the expectation is that the program will automatically

|

||||

size the filter to 16 GB, and expect to store ~1G candidates,

|

||||

leaving enough room to breeze for the system.

|

||||

|

||||

The command line becomes :

|

||||

The command line becomes:

|

||||

```

|

||||

./collisionsTest --nbh=14G --filter NameOfHash

|

||||

```

|

||||

|

||||

#### Examples :

|

||||

|

||||

Here are a few results produced with this tester :

|

||||

Here are a few results produced with this tester:

|

||||

|

||||

| Algorithm | Input Len | Nb Hashes | Expected | Nb Collisions | Notes |

|

||||

| --- | --- | --- | --- | --- | --- |

|

||||

| __XXH3__ | 256 | 100 Gi | 312.5 | 326 | |

|

||||

| __XXH64__ | 256 | 100 Gi | 312.5 | 294 | |

|

||||

| __XXH128__ | 256 | 100 Gi | 0.0 | 0 | As a 128-bit hash, we expect XXH128 to generate 0 hash |

|

||||

| __XXH128__ | 256 | 100 Gi | 0.0 | 0 | As a 128-bit hash, we expect XXH128 to generate 0 collisions |

|

||||

| __XXH128__ low 64-bit | 512 | 100 Gi | 312.5 | 321 | |

|

||||

| __XXH128__ high 64-bit | 512 | 100 Gi | 312.5 | 325 | |

|

||||

|

||||

Test on small inputs :

|

||||

Test on small inputs:

|

||||

|

||||

| Algorithm | Input Len | Nb Hashes | Expected | Nb Collisions | Notes |

|

||||

| --- | --- | --- | --- | --- | --- |

|

||||

|

||||

@ -18,16 +18,16 @@

|

||||

* with this program; if not, write to the Free Software Foundation, Inc.,

|

||||

* 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA.

|

||||

*

|

||||

* You can contact the author at :

|

||||

* - xxHash homepage : http://www.xxhash.com

|

||||

* - xxHash source repository : https://github.com/Cyan4973/xxHash

|

||||

* You can contact the author at:

|

||||

* - xxHash homepage: http://www.xxhash.com

|

||||

* - xxHash source repository: https://github.com/Cyan4973/xxHash

|

||||

*/

|

||||

|

||||

#ifndef HASHES_H_1235465

|

||||

#define HASHES_H_1235465

|

||||

|

||||

#include <stddef.h> /* size_t */

|

||||

#include <stdint.h> /* uint64_t */

|

||||

#include <stddef.h> /* size_t */

|

||||

#include <stdint.h> /* uint64_t */

|

||||

#define XXH_INLINE_ALL /* XXH128_hash_t */

|

||||

#include "xxhash.h"

|

||||

|

||||

|

||||

@ -18,22 +18,22 @@

|

||||

* with this program; if not, write to the Free Software Foundation, Inc.,

|

||||

* 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA.

|

||||

*

|

||||

* You can contact the author at :

|

||||

* - xxHash homepage : http://www.xxhash.com

|

||||

* - xxHash source repository : https://github.com/Cyan4973/xxHash

|

||||

* You can contact the author at:

|

||||

* - xxHash homepage: http://www.xxhash.com

|

||||

* - xxHash source repository: https://github.com/Cyan4973/xxHash

|

||||

*/

|

||||

|

||||

/*

|

||||

* The collision tester will generate 24 billions hashes (by default),

|

||||

* The collision tester will generate 24 billion hashes (by default),

|

||||

* and count how many collisions were produced by the 64-bit hash algorithm.

|

||||

* The optimal amount of collisions for 64-bit is ~18 collisions.

|

||||

* A good hash should be close to this figure.

|

||||

*

|

||||

* This program requires a lot of memory :

|

||||

* This program requires a lot of memory:

|

||||

* - Either store hash values directly => 192 GB

|

||||

* - Either use a filter :

|

||||

* - Either use a filter:

|

||||

* - 32 GB (by default) for the filter itself

|

||||

* - + ~14 GB for the list of hashes (depending on filter outcome)

|

||||

* - + ~14 GB for the list of hashes (depending on the filter's outcome)

|

||||

* Due to these memory constraints, it requires a 64-bit system.

|

||||

*/

|

||||

|

||||

@ -88,7 +88,8 @@ static void printHash(const void* table, size_t n, Htype_e htype)

|

||||

|

||||

/* === Generate Random unique Samples to hash === */

|

||||

|

||||

/* These functions will generate and update a sample to hash.

|

||||

/*

|

||||

* These functions will generate and update a sample to hash.

|

||||

* initSample() will fill a buffer with random bytes,

|

||||

* updateSample() will modify one slab in the input buffer.

|

||||

* updateSample() guarantees it will produce unique samples,

|

||||

@ -122,11 +123,11 @@ typedef enum { sf_slab5, sf_sparse } sf_genMode;

|

||||

|

||||

#ifdef SLAB5

|

||||

|

||||

/* Slab5 sample generation.

|

||||

* This algorithm generates unique inputs

|

||||

* flipping on average 16 bits per candidate.

|

||||

* It is generally much more friendly for most hash algorithms,

|

||||

* especially weaker ones, as it shuffles more the input.

|

||||

/*

|

||||

* Slab5 sample generation.

|

||||

* This algorithm generates unique inputs flipping on average 16 bits per candidate.

|

||||

* It is generally much more friendly for most hash algorithms, especially

|

||||

* weaker ones, as it shuffles more the input.

|

||||

* The algorithm also avoids overfitting the per4 or per8 ingestion patterns.

|

||||

*/

|

||||

|

||||

@ -193,12 +194,13 @@ static inline void update_sampleFactory(sampleFactory* sf)

|

||||

|

||||

#else

|

||||

|

||||

/* Sparse sample generation.

|

||||

/*

|

||||

* Sparse sample generation.

|

||||

* This is the default pattern generator.

|

||||

* It only flips one bit at a time (mostly).

|

||||

* Low hamming distance scenario is more difficult for weak hash algorithms.

|

||||

* Note that CRC are immune to this scenario,

|

||||

* since they are specifically designed to detect low hamming distances.

|

||||

* Note that CRC is immune to this scenario, since they are specifically

|

||||

* designed to detect low hamming distances.

|

||||

* Prefer the Slab5 pattern generator for collisions on CRC algorithms.

|

||||

*/

|

||||

|

||||

@ -297,13 +299,13 @@ static int updateBit(void* buffer, size_t* bitIdx, int level, size_t max)

|

||||

|

||||

flipbit(buffer, bitIdx[level]); /* erase previous bits */

|

||||

|

||||

if (bitIdx[level] < max-1) { /* simple case : go to next bit */

|

||||

if (bitIdx[level] < max-1) { /* simple case: go to next bit */

|

||||

bitIdx[level]++;

|

||||

flipbit(buffer, bitIdx[level]); /* set new bit */

|

||||

return 1;

|

||||

}

|

||||

|

||||

/* reached last bit : need to update a bit from lower level */

|

||||

/* reached last bit: need to update a bit from lower level */

|

||||

if (!updateBit(buffer, bitIdx, level-1, max-1)) return 0;

|

||||

bitIdx[level] = bitIdx[level-1] + 1;

|

||||

flipbit(buffer, bitIdx[level]); /* set new bit */

|

||||

@ -349,11 +351,12 @@ void free_Filter(Filter* bf)

|

||||

|

||||

#ifdef FILTER_1_PROBE

|

||||

|

||||

/* Attach hash to a slot

|

||||

* return : Nb of potential collision candidates detected

|

||||

* 0 : position not yet occupied

|

||||

* 2 : position previously occupied by a single candidate

|

||||

* 1 : position already occupied by multiple candidates

|

||||

/*

|

||||

* Attach hash to a slot

|

||||

* return: Nb of potential collision candidates detected

|

||||

* 0: position not yet occupied

|

||||

* 2: position previously occupied by a single candidate

|

||||

* 1: position already occupied by multiple candidates

|

||||

*/

|

||||

inline int Filter_insert(Filter* bf, int bflog, uint64_t hash)

|

||||

{

|

||||

@ -372,11 +375,12 @@ inline int Filter_insert(Filter* bf, int bflog, uint64_t hash)

|

||||

return addCandidates[existingCandidates];

|

||||

}

|

||||

|

||||

/* Check if provided 64-bit hash is a collision candidate

|

||||

/*

|

||||

* Check if provided 64-bit hash is a collision candidate

|

||||

* Requires the slot to be occupied by at least 2 candidates.

|

||||

* return >0 if hash is a collision candidate

|

||||

* 0 otherwise (slot unoccupied, or only one candidate)

|

||||

* note: slot unoccupied should not happen in this algorithm,

|

||||

* note: unoccupied slots should not happen in this algorithm,

|

||||

* since all hashes are supposed to have been inserted at least once.

|

||||

*/

|

||||

inline int Filter_check(const Filter* bf, int bflog, uint64_t hash)

|

||||

@ -392,19 +396,22 @@ inline int Filter_check(const Filter* bf, int bflog, uint64_t hash)

|

||||

|

||||

#else

|

||||

|

||||

/* 2-probes strategy,

|

||||

/*

|

||||

* 2-probes strategy,

|

||||

* more efficient at filtering candidates,

|

||||

* requires filter size to be > nb of hashes */

|

||||

* requires filter size to be > nb of hashes

|

||||

*/

|

||||

|

||||

#define MIN(a,b) ((a) < (b) ? (a) : (b))

|

||||

#define MAX(a,b) ((a) > (b) ? (a) : (b))

|

||||

|

||||

/* Attach hash to 2 slots

|

||||

* return : Nb of potential candidates detected

|

||||

* 0 : position not yet occupied

|

||||

* 2 : position previously occupied by a single candidate (at most)

|

||||

* 1 : position already occupied by multiple candidates

|

||||

*/

|

||||

/*

|

||||

* Attach hash to 2 slots

|

||||

* return: Nb of potential candidates detected

|

||||

* 0: position not yet occupied

|

||||

* 2: position previously occupied by a single candidate (at most)

|

||||

* 1: position already occupied by multiple candidates

|

||||

*/

|

||||

static inline int Filter_insert(Filter* bf, int bflog, uint64_t hash)

|

||||

{

|

||||

hash = avalanche64(hash);

|

||||

@ -437,13 +444,14 @@ static inline int Filter_insert(Filter* bf, int bflog, uint64_t hash)

|

||||

}

|

||||

|

||||

|

||||

/* Check if provided 64-bit hash is a collision candidate

|

||||

* Requires the slot to be occupied by at least 2 candidates.

|

||||

* return >0 if hash is collision candidate

|

||||

* 0 otherwise (slot unoccupied, or only one candidate)

|

||||

* note: slot unoccupied should not happen in this algorithm,

|

||||

* since all hashes are supposed to have been inserted at least once.

|

||||

*/

|

||||

/*

|

||||

* Check if provided 64-bit hash is a collision candidate

|

||||

* Requires the slot to be occupied by at least 2 candidates.

|

||||

* return >0 if hash is a collision candidate

|

||||

* 0 otherwise (slot unoccupied, or only one candidate)

|

||||

* note: unoccupied slots should not happen in this algorithm,

|

||||

* since all hashes are supposed to have been inserted at least once.

|

||||

*/

|

||||

static inline int Filter_check(const Filter* bf, int bflog, uint64_t hash)

|

||||

{

|

||||

hash = avalanche64(hash);

|

||||

@ -490,7 +498,7 @@ void update_indicator(uint64_t v, uint64_t total)

|

||||

}

|

||||

}

|

||||

|

||||

/* note : not thread safe */

|

||||

/* note: not thread safe */

|

||||

const char* displayDelay(double delay_s)

|

||||

{

|

||||

static char delayString[50];

|

||||

@ -568,7 +576,7 @@ typedef struct {

|

||||

uint64_t maskSelector;

|

||||

size_t sampleSize;

|

||||

uint64_t prngSeed;

|

||||

int filterLog; /* <0 = disable filter; 0= auto-size; */

|

||||

int filterLog; /* <0 = disable filter; 0 = auto-size; */

|

||||

int hashID;

|

||||

int display;

|

||||

int nbThreads;

|

||||

@ -608,7 +616,7 @@ static int isHighEqual(void* hTablePtr, size_t index1, size_t index2, Htype_e ht

|

||||

return (h1 >> rShift) == (h2 >> rShift);

|

||||

}

|

||||

|

||||

/* assumption : (htype*)hTablePtr[index] is valid */

|

||||

/* assumption: (htype*)hTablePtr[index] is valid */

|

||||

static void addHashCandidate(void* hTablePtr, UniHash h, Htype_e htype, size_t index)

|

||||

{

|

||||

if ((htype == ht64) || (htype == ht32)) {

|

||||

@ -668,12 +676,12 @@ static size_t search_collisions(

|

||||

|

||||

if (filter) {

|

||||

time_t const filterTBegin = time(NULL);

|

||||

DISPLAY(" create filter (%i GB) \n", (int)(bfsize >> 30));

|

||||

DISPLAY(" Creating filter (%i GB) \n", (int)(bfsize >> 30));

|

||||

bf = create_Filter(bflog);

|

||||

if (!bf) EXIT("not enough memory for filter");

|

||||

|

||||

|

||||

DISPLAY(" generate %llu hashes from samples of %u bytes \n",

|

||||

DISPLAY(" Generate %llu hashes from samples of %u bytes \n",

|

||||

(unsigned long long)totalH, (unsigned)sampleSize);

|

||||

nbPresents = 0;

|

||||

|

||||

@ -689,7 +697,7 @@ static size_t search_collisions(

|

||||

}

|

||||

|

||||

if (nbPresents==0) {

|

||||

DISPLAY(" analysis completed : no collision detected \n");

|

||||

DISPLAY(" Analysis completed: No collision detected \n");

|

||||

if (param.resultPtr) param.resultPtr->nbCollisions = 0;

|

||||

free_Filter(bf);

|

||||

free_sampleFactory(sf);

|

||||

@ -697,18 +705,18 @@ static size_t search_collisions(

|

||||

}

|

||||

|

||||

{ double const filterDelay = difftime(time(NULL), filterTBegin);

|

||||

DISPLAY(" generation and filter completed in %s, detected up to %llu candidates \n",

|

||||

DISPLAY(" Generation and filter completed in %s, detected up to %llu candidates \n",

|

||||

displayDelay(filterDelay), (unsigned long long) nbPresents);

|

||||

} }

|

||||

|

||||

|

||||

/* === store hash candidates : duplicates will be present here === */

|

||||

/* === store hash candidates: duplicates will be present here === */

|

||||

|

||||

time_t const storeTBegin = time(NULL);

|

||||

size_t const hashByteSize = (htype == ht128) ? 16 : 8;

|

||||

size_t const tableSize = (nbPresents+1) * hashByteSize;

|

||||

assert(tableSize > nbPresents); /* check tableSize calculation overflow */

|

||||

DISPLAY(" store hash candidates (%i MB) \n", (int)(tableSize >> 20));

|

||||

DISPLAY(" Storing hash candidates (%i MB) \n", (int)(tableSize >> 20));

|

||||

|

||||

/* Generate and store hashes */

|

||||

void* const hashCandidates = malloc(tableSize);

|

||||

@ -733,20 +741,20 @@ static size_t search_collisions(

|

||||

}

|

||||

}

|

||||

if (nbCandidates < nbPresents) {

|

||||

/* try to mitigate gnuc_quicksort behavior, by reducing allocated memory,

|

||||

/* Try to mitigate gnuc_quicksort behavior, by reducing allocated memory,

|

||||

* since gnuc_quicksort uses a lot of additional memory for mergesort */

|

||||

void* const checkPtr = realloc(hashCandidates, nbCandidates * hashByteSize);

|

||||

assert(checkPtr != NULL);

|

||||

assert(checkPtr == hashCandidates); /* simplification : since we are reducing size,

|

||||

assert(checkPtr == hashCandidates); /* simplification: since we are reducing the size,

|

||||

* we hope to keep the same ptr position.

|

||||

* Otherwise, hashCandidates must be mutable */

|

||||

DISPLAY(" list of hash reduced to %u MB from %u MB (saved %u MB) \n",

|

||||

* Otherwise, hashCandidates must be mutable. */

|

||||

DISPLAY(" List of hashes reduced to %u MB from %u MB (saved %u MB) \n",

|

||||

(unsigned)((nbCandidates * hashByteSize) >> 20),

|

||||

(unsigned)(tableSize >> 20),

|

||||

(unsigned)((tableSize - (nbCandidates * hashByteSize)) >> 20) );

|

||||

}

|

||||

double const storeTDelay = difftime(time(NULL), storeTBegin);

|

||||

DISPLAY(" stored %llu hash candidates in %s \n",

|

||||

DISPLAY(" Stored %llu hash candidates in %s \n",

|

||||

(unsigned long long) nbCandidates, displayDelay(storeTDelay));

|

||||

free_Filter(bf);

|

||||

free_sampleFactory(sf);

|

||||

@ -754,7 +762,7 @@ static size_t search_collisions(

|

||||

|

||||

/* === step 3 : look for duplicates === */

|

||||

time_t const sortTBegin = time(NULL);

|

||||

DISPLAY(" sorting candidates... ");

|

||||

DISPLAY(" Sorting candidates... ");

|

||||

fflush(NULL);

|

||||

if ((htype == ht64) || (htype == ht32)) {

|

||||

sort64(hashCandidates, nbCandidates); /* using C++ sort, as it's faster than C stdlib's qsort,

|

||||

@ -764,16 +772,16 @@ static size_t search_collisions(

|

||||

sort128(hashCandidates, nbCandidates); /* sort with custom comparator */

|

||||

}

|

||||

double const sortTDelay = difftime(time(NULL), sortTBegin);

|

||||

DISPLAY(" completed in %s \n", displayDelay(sortTDelay));

|

||||

DISPLAY(" Completed in %s \n", displayDelay(sortTDelay));

|

||||

|

||||

/* scan and count duplicates */

|

||||

time_t const countBegin = time(NULL);

|

||||

DISPLAY(" looking for duplicates : ");

|

||||

DISPLAY(" Looking for duplicates: ");

|

||||

fflush(NULL);

|

||||

size_t collisions = 0;

|

||||

for (size_t n=1; n<nbCandidates; n++) {

|

||||

if (isEqual(hashCandidates, n, n-1, htype)) {

|

||||

printf("collision : ");

|

||||

printf("collision: ");

|

||||

printHash(hashCandidates, n, htype);

|

||||

printf(" / ");

|

||||

printHash(hashCandidates, n-1, htype);

|

||||

@ -800,17 +808,17 @@ static size_t search_collisions(

|

||||

} }

|

||||

double const collisionRatio = (double)HBits_collisions / expectedCollisions;

|

||||

if (collisionRatio > 2.0) DISPLAY("WARNING !!! ===> ");

|

||||

DISPLAY(" high %i bits : %zu collision (%.1f expected) : x%.2f \n",

|

||||

DISPLAY(" high %i bits: %zu collision (%.1f expected): x%.2f \n",

|

||||

nbHBits, HBits_collisions, expectedCollisions, collisionRatio);

|

||||

if (collisionRatio > worstRatio) {

|

||||

worstNbHBits = nbHBits;

|

||||

worstRatio = collisionRatio;

|

||||

} } }

|

||||

DISPLAY("Worst collision ratio at %i high bits : x%.2f \n",

|

||||

DISPLAY("Worst collision ratio at %i high bits: x%.2f \n",

|

||||

worstNbHBits, worstRatio);

|

||||

}

|

||||

double const countDelay = difftime(time(NULL), countBegin);

|

||||

DISPLAY(" completed in %s \n", displayDelay(countDelay));

|

||||

DISPLAY(" Completed in %s \n", displayDelay(countDelay));

|

||||

|

||||

/* clean and exit */

|

||||

free (hashCandidates);

|

||||

@ -863,7 +871,7 @@ void time_collisions(searchCollisions_parameters param)

|

||||

size_t const programBytesSelf = getProcessMemUsage(0);

|

||||

size_t const programBytesChildren = getProcessMemUsage(1);

|

||||

DISPLAY("\n\n");

|

||||

DISPLAY("===> found %llu collisions (x%.2f, %.1f expected) in %s\n",

|

||||

DISPLAY("===> Found %llu collisions (x%.2f, %.1f expected) in %s\n",

|

||||

(unsigned long long)collisions,

|

||||

(double)collisions / targetColls,

|

||||

targetColls,

|

||||

@ -883,9 +891,10 @@ void MT_searchCollisions(void* payload)

|

||||

|

||||

/* === Command Line === */

|

||||

|

||||

/*! readU64FromChar() :

|

||||

* allows and interprets K, KB, KiB, M, MB and MiB suffix.

|

||||

* Will also modify `*stringPtr`, advancing it to position where it stopped reading.

|

||||

/*!

|

||||

* readU64FromChar():

|

||||

* Allows and interprets K, KB, KiB, M, MB and MiB suffix.

|

||||

* Will also modify `*stringPtr`, advancing it to the position where it stopped reading.

|

||||

*/

|

||||

static uint64_t readU64FromChar(const char** stringPtr)

|

||||

{

|

||||

@ -917,13 +926,15 @@ static uint64_t readU64FromChar(const char** stringPtr)

|

||||

}

|

||||

|

||||

|

||||

/** longCommandWArg() :

|

||||

* check if *stringPtr is the same as longCommand.

|

||||

* If yes, @return 1 and advances *stringPtr to the position which immediately follows longCommand.

|

||||

/**

|

||||

* longCommandWArg():

|

||||

* Checks if *stringPtr is the same as longCommand.

|

||||

* If yes, @return 1 and advances *stringPtr to the position which immediately follows longCommand.

|

||||

* @return 0 and doesn't modify *stringPtr otherwise.

|

||||

*/

|

||||

static int longCommandWArg(const char** stringPtr, const char* longCommand)

|

||||

{

|

||||

assert(longCommand); assert(stringPtr); assert(*stringPtr);

|

||||

size_t const comSize = strlen(longCommand);

|

||||

int const result = !strncmp(*stringPtr, longCommand, comSize);

|

||||

if (result) *stringPtr += comSize;

|

||||

@ -933,34 +944,36 @@ static int longCommandWArg(const char** stringPtr, const char* longCommand)

|

||||

|

||||

#include "pool.h"

|

||||

|

||||

/* As some hashes use different algorithms depending on input size,

|

||||

/*

|

||||

* As some hashes use different algorithms depending on input size,

|

||||

* it can be necessary to test multiple input sizes

|

||||

* to paint an accurate picture on collision performance */

|

||||

* to paint an accurate picture of collision performance

|

||||

*/

|

||||

#define SAMPLE_SIZE_DEFAULT 255

|

||||

#define HASHFN_ID_DEFAULT 0

|

||||

|

||||

void help(const char* exeName)

|

||||

{

|

||||

printf("usage: %s [hashName] [opt] \n\n", exeName);

|

||||

printf("list of hashNames : ");

|

||||

printf("list of hashNames:");

|

||||

printf("%s ", hashfnTable[0].name);

|

||||

for (int i=1; i < HASH_FN_TOTAL; i++) {

|

||||

printf(", %s ", hashfnTable[i].name);

|

||||

}

|

||||

printf(" \n");

|

||||

printf("default hashName is %s \n", hashfnTable[HASHFN_ID_DEFAULT].name);

|

||||

printf("Default hashName is %s\n", hashfnTable[HASHFN_ID_DEFAULT].name);

|

||||

|

||||

printf(" \n");

|

||||

printf("Optional parameters: \n");

|

||||

printf("--nbh=# : select nb of hashes to generate (%llu by default) \n", (unsigned long long)select_nbh(64));

|

||||

printf("--filter : activated the filter. Reduce memory usage for same nb of hashes. Slower. \n");

|

||||

printf("--threadlog=# : use 2^# threads \n");

|

||||

printf("--len=# : select length of input (%i bytes by default) \n", SAMPLE_SIZE_DEFAULT);

|

||||